SEO Insights – March 2022 Report

Duplicate Content

60% of the content on the internet is duplicate, according to Gary Illyes, webmaster trends analyst at Google. That’s an astonishing number, and it reflects the challenge search engines face: making sense of the billions of pages they discover when crawling the web while still delivering the most relevant results to users.

Duplicate content can take many forms. A significant share of this 60% most likely comes from websites that can be crawled in multiple versions (www and non-www, http and https, etc.) and from pages that are duplicated through URL parameters such as sorting and view options.

For example, these are all essentially the same page, but if they are not handled correctly, every one of them can be crawled and indexed by Google:

- http://website.com/page

- http://website.com/page/

- https://website.com/page/

- http://www.website.com/page/

- https://www.website.com/page/

- http://website.com/page/?sort=asc

- https://website.com/page/?sort=asc

- http://www.website.com/page/?sort=asc

- https://www.website.com/page/?sort=asc

- http://website.com/page?sort=asc

- https://website.com/page?sort=asc

- http://www.website.com/page?sort=asc

- https://www.website.com/page?sort=asc

- https://www.website.com/page?sort=asc&view=grid

Can you spot all of the differences in the URL formats?
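As a rough illustration, the differences above come down to four things: protocol, www or non-www, trailing slash, and parameters. The minimal Python sketch below collapses all of the listed variants into one address. It assumes that the https and www version without a trailing slash is the preferred one, and that `sort` and `view` only change how the page is displayed; both are assumptions made for this example, and the function name `normalise` is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Assumption: these parameters change presentation only, not content.
PRESENTATION_PARAMS = {"sort", "view"}


def normalise(url: str) -> str:
    """Collapse protocol, www, trailing-slash and parameter variants."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if not host.startswith("www."):
        host = "www." + host  # assumption: the www version is preferred
    path = parts.path.rstrip("/") or "/"  # assumption: no trailing slash
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in PRESENTATION_PARAMS
    ]
    return urlunsplit(("https", host, path, urlencode(kept), ""))


variants = [
    "http://website.com/page",
    "http://website.com/page/",
    "https://website.com/page/",
    "http://www.website.com/page/",
    "https://www.website.com/page/",
    "http://website.com/page/?sort=asc",
    "https://website.com/page/?sort=asc",
    "http://www.website.com/page/?sort=asc",
    "https://www.website.com/page/?sort=asc",
    "http://website.com/page?sort=asc",
    "https://website.com/page?sort=asc",
    "http://www.website.com/page?sort=asc",
    "https://www.website.com/page?sort=asc",
    "https://www.website.com/page?sort=asc&view=grid",
]

unique = {normalise(url) for url in variants}
print(len(variants), "crawlable URLs ->", len(unique), "page")
```

On a live website this consolidation is normally done with redirects and canonical signals rather than a script; the sketch only shows why fourteen addresses can count as a single page.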

Then we have content that is duplicated across multiple websites: syndicated articles, published press releases, product descriptions that are used by every supplier, etc.

And finally, in an area which is a little more grey, there is content that is very similar.

Sometimes there are only so many ways in which a product, service or answer to a question can be phrased. This often causes pages to appear to be very similar and they can be flagged as duplicate content too.

When so much of the internet is seen as duplicate, it shows how difficult it is to be heard through all of the noise. It also highlights just how critical it is that search engines view content as unique, engaging and useful before they will consider it for indexing and ranking.

Content for content’s sake just doesn’t work for search these days. It requires more effort than ever before to give it the best possible chance to appear in the search results. ‘Content is king’ is becoming more and more relevant by the day.

Instability of Search Results

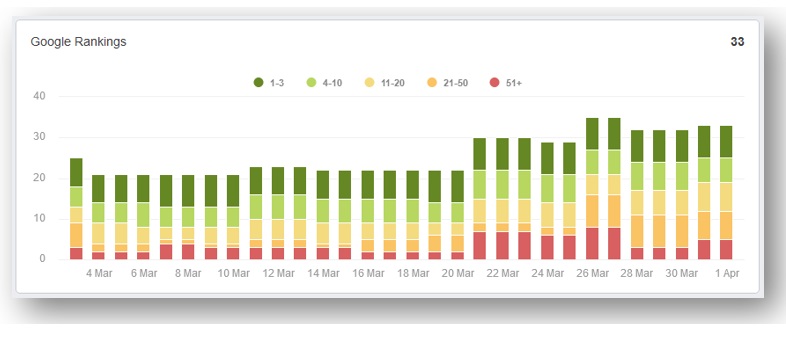

Here at Artemis we have a rank tracker that our clients can access to see how the rankings for their key search terms are performing over time. The rank tracker is helpful as a guide to evaluate overall progress but often it can lead to some concerns from clients when the tracker turns red instead of green.

This is quite normal search ranking behaviour! As the Greek philosopher Heraclitus is said to have put it:

“Change is the only constant in life”

This is very true of search engines. In 2020, Google made 4,500 changes to its search results; that’s around 12 per day. The majority of changes will have been relatively minor, such as spacing of elements in the results pages, changes in colours, etc., whilst others, such as core updates, will have been quite significant.

In addition to this, Google has several AI systems working to further refine the search results, such as RankBrain, neural matching, BERT and, very shortly, MUM. As these systems keep learning, we can expect changes in search to arrive more and more frequently.

We are already seeing this behaviour and it’s why stable search results just don’t exist these days. It’s very rare that the top 10 results don’t change at all. In fact, the results can change just by searching from a different location, on a different device, or at a different time of the day or year. And if something hits the news, everything changes!

Google’s ranking algorithm simply wouldn’t work if its results didn’t constantly change and evolve. We have to accept that there will always be changes in keyword rankings from month to month, and sometimes even on a daily or weekly basis.

But the red days are not a time to panic or get demoralised. The important thing is to keep improving the content, speed and usability of the pages, refining them and adapting them as Google’s view of the intent behind each search query changes over time.

Google URL Parameters Tool

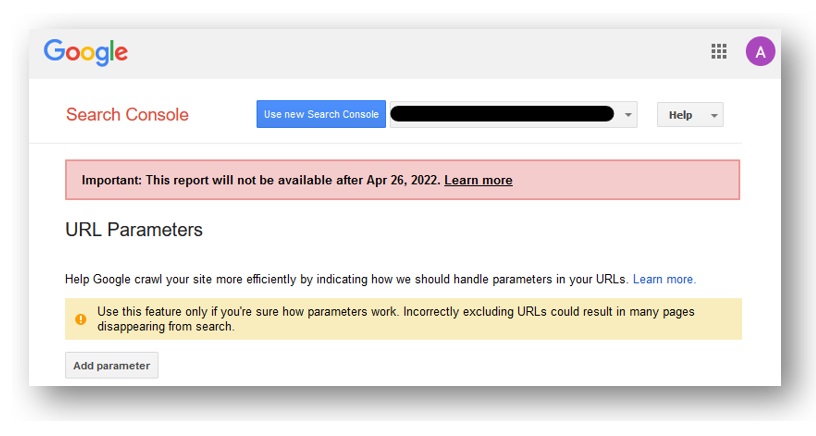

Continuing with the change and duplicate content themes, Google announced in March that it will be removing the URL Parameters tool from Search Console on April 26th.

This tool was introduced many years ago to help webmasters control how Google crawls and indexes pages with URL parameters, for example parameters that make no difference to the content of the page, such as those used for sorting results.

The examples above include URLs with a parameter for sorting in ascending order, for example:

https://www.website.com/page/?sort=asc

But you can often also make a page display its content, such as products, in descending order, for example:

https://www.website.com/page/?sort=dsc

The pages are the same, just displayed in a different way for the user. The URL Parameters tool was introduced so that you could tell Google to ignore the “sort” parameter, as it doesn’t change the content of the page. This helped to improve crawling and to ensure that the correct page was indexed, and only that page, rather than all of the variants.

However, Google has now become very good at working out how to handle URL parameters, even when a website hasn’t explicitly stated how to treat them by no-indexing pages or blocking crawlers in robots.txt.

It was never a widely used tool, and webmasters, SEOs and many content management systems are now much better at telling Google what to crawl and what not to crawl on a website.
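To give a feel for what explicitly stating how to handle parameters can look like, here is a minimal Python sketch of the no-indexing approach. It returns a robots meta tag for any URL that carries a presentation-only parameter, which a page template could then place in the page’s head. The function name `robots_meta` and the list of parameters are assumptions made for this example, not a description of how any particular content management system works.

```python
from urllib.parse import urlsplit, parse_qsl

# Assumption: these parameters only re-order or re-style the same content.
PRESENTATION_PARAMS = {"sort", "view"}


def robots_meta(url: str) -> str:
    """Return a noindex tag for parameter variants, or an empty string."""
    query = urlsplit(url).query
    names = {key for key, _ in parse_qsl(query, keep_blank_values=True)}
    if names & PRESENTATION_PARAMS:
        # Ask search engines not to index the variant, but still follow its links.
        return '<meta name="robots" content="noindex, follow">'
    return ""


print(robots_meta("https://www.website.com/page/?sort=dsc"))
print(repr(robots_meta("https://www.website.com/page/")))
```

Whether no-indexing, blocking in robots.txt or simply leaving it to Google is the right choice depends on the website, so treat this as one option rather than a recommendation.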

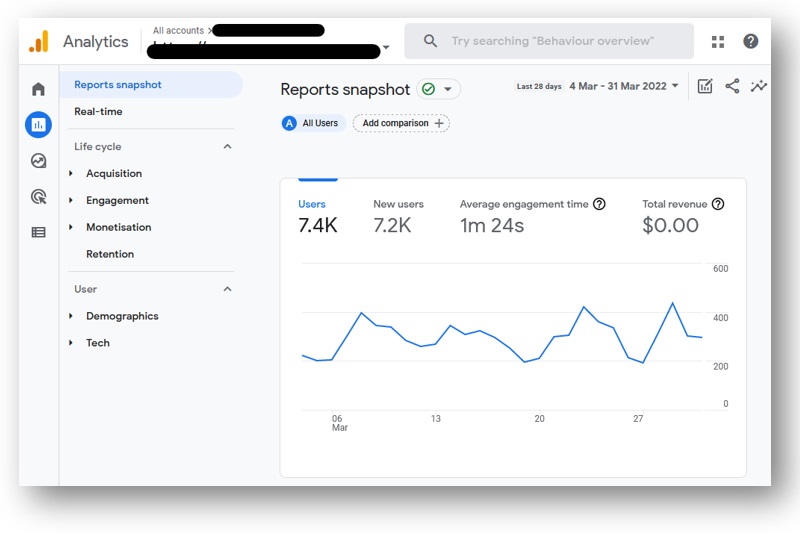

Farewell Universal Analytics, hello Google Analytics 4 (GA4)

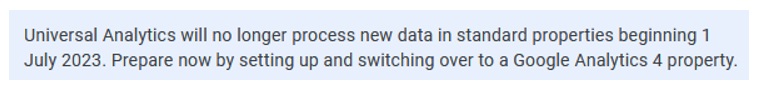

If you’ve logged into your Google Analytics (GA) account recently you may have spotted a new message.

The trusted, faithful and well-used Google Analytics that we have all become so reliant on for so many years is moving on and making way for an all-new version of analytics called GA4.

The announcement by Google in March that GA will stop processing new data from July 2023 has had many SEOs in tears. GA4 is currently quite an unloved new product from Google, mainly because it’s so different to what we have been familiar with for so long.

However, when you start spending time working with GA4, learning how it works and how to generate the reports and data that you need, it’s actually a far superior product to GA. It is also far quicker than GA (a much appreciated improvement) and uses AI extensively to help users by surfacing useful insights based on the data collected.

GA4 comes at a time when there is a growing shift towards a cookie-less online world. It has been designed to still be able to collect or interpret data even when a user has chosen not to accept cookies on a website. Google originally stated the following about this:

“Because the technology landscape continues to evolve, the new Analytics is designed to adapt to a future with or without cookies or identifiers. It uses a flexible approach to measurement, and in the future, will include modelling to fill in the gaps where the data may be incomplete.”

Essentially, GA4 is designed to use AI-based modelling to fill in the gaps when data is missing, so all is not lost when users are on your website with cookies disabled. With the current Google Analytics, that data is never gathered and is lost forever.

When you compare GA and GA4 data today you’ll notice some slight differences in the numbers in the reports. That’s because GA4 captures data in a different way to GA.

We have already been preparing all of our clients for the changeover to GA4. We set up their GA4 accounts as soon as GA4 was released, which means that they’ve been accumulating data all this time. GA data cannot be carried over into GA4, so it’s important to have this data in GA4 now for comparison going forward.

Additionally, we will be providing some guides to help our clients become familiar with GA4 in the run-up to the switchover. There’s still plenty of time before this happens but it’s good to be prepared.

We look forward to getting the most out of the new features and data available within GA4 to continue to benefit our clients in search.