Crawlability and the basics of SEO

SEO is an ever-elusive concept, but what actually is search engine optimisation? In truth, probably no one fully understands how search engines evaluate online content. It's a constantly evolving game, and the rules are continually being redefined, especially in our new AI-dominated search world.

Even people at Google sometimes struggle to explain exactly how their algorithms rank a given piece of content, especially with more than 200 ranking factors in play. SEO brings with it new ideas, new knowledge and new concepts. Google's crawlers discover content, and its ranking systems decide what to show for each search query; our job is to understand the signals those systems read. We can discuss technical concepts like search engine indexes, nofollow links, mobile-first indexing, sitemap files and server-side rendering all we like – they all play a part in the success of a site.

However, this guide focuses on two key elements, crawlability and indexability, and the role each plays in the technical health of your site.

What Is Crawlability?

Before Google can index a piece of content, a search engine crawler (or spider), the bot that fetches and scans web pages, must first be able to access it. Only once a page has been crawled and indexed can it earn a place in the search engine results pages (SERPs). If Google's crawlers cannot find your content, Google cannot list it.

Think about a time before the internet. Businesses used to advertise and promote their services in directories like the Yellow Pages, a far cry from today, when most of us go straight to the Google search bar. Back then, a person could choose to list their phone number for others to find, or leave it unlisted and remain unknown.

It's the same concept with Google search. Your web page (whether that's a blog post or otherwise) must be open to crawlers so it can be stored in the search engine's index.

Why Is Crawlability Important?

Crawlability is the foundation of your entire SEO strategy. Without it, your content simply won't appear in Google search results, however high its quality or relevance. Search engine bots need to be able to discover and navigate your website efficiently to understand what your pages are about.

Poor crawlability leads to:

- Incomplete indexing of your website

- Lower organic traffic

- Relevant content failing to gain the traction it deserves

- Reduced visibility in search results

Even the most valuable content earns no search traffic if search engines can't find it in the first place. This is why it's important to address crawlability issues as soon as they crop up.

What Is Indexability?

While crawlability refers to search engines’ ability to access and crawl your web pages, indexability determines whether those pages can be added to a search engine’s indexes.

Think of it this way: crawlability is about search engine bots being able to discover your content, while indexability is about whether those pages are eligible to appear in search results after being discovered.

A page might be crawlable but not indexable due to factors like:

- A “noindex” directive, set in a robots meta tag in the HTML or in an X-Robots-Tag HTTP header

- Canonical tags pointing to other pages

- Low-quality or duplicate content that search engines choose not to index

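- Blocking the page in robots.txt. Strictly, this stops the page from being crawled at all, so search engines can't read its content or any noindex tag on it; the bare URL can still appear in results if other pages link to it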
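The first few factors can be checked mechanically once a page has been fetched. Below is a minimal Python sketch using only the standard library. The class and function names are hypothetical, and it assumes you already have the page's HTML and response headers (for example, from a crawler export). It looks for a noindex directive in a robots meta tag or in the `X-Robots-Tag` HTTP header, and for a canonical tag pointing at a different URL. It does not judge content quality, and robots.txt blocking needs a separate check.

```python
from html.parser import HTMLParser
from typing import Mapping, Optional
from urllib.parse import urljoin


class IndexSignalParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_directives: list[str] = []
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() in ("robots", "googlebot"):
            # e.g. <meta name="robots" content="noindex, follow">
            self.robots_directives += [d.strip().lower() for d in attr.get("content", "").split(",")]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")


def indexability_blockers(page_url: str, html: str, headers: Mapping[str, str]) -> list[str]:
    """Return reasons why a page that was crawled successfully might still not be indexed."""
    parser = IndexSignalParser()
    parser.feed(html)
    reasons = []
    # "none" is shorthand for "noindex, nofollow".
    if {"noindex", "none"} & set(parser.robots_directives):
        reasons.append("robots meta tag contains noindex")
    # HTTP header names are case-insensitive, so normalise them before the lookup.
    lowered = {name.lower(): value for name, value in headers.items()}
    if "noindex" in lowered.get("x-robots-tag", "").lower():
        reasons.append("X-Robots-Tag header contains noindex")
    if parser.canonical:
        # Resolve a relative canonical URL against the page URL before comparing.
        target = urljoin(page_url, parser.canonical)
        if target.rstrip("/") != page_url.rstrip("/"):
            reasons.append(f"canonical points elsewhere: {target}")
    return reasons


if __name__ == "__main__":
    # Hypothetical page: a filtered product URL that is noindexed and canonicalised.
    html = '<head><meta name="robots" content="noindex, follow"><link rel="canonical" href="/shoes/"></head>'
    for reason in indexability_blockers("https://www.example.com/shoes?colour=red", html, {}):
        print("-", reason)
```

The trailing-slash normalisation is deliberately crude. A real audit would also normalise protocol, host case and tracking parameters before deciding that a canonical "points elsewhere".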

Robots.txt Files: How Do They Work?

Websites use a plain-text file called robots.txt, placed at the root of the domain. It's the standard that well-behaved crawlers follow, and it outlines the permissions a crawler has on a website (i.e., which URLs it can and cannot crawl). Robots.txt is part of the Robots Exclusion Protocol (REP), a group of web standards that govern how crawlers access websites.

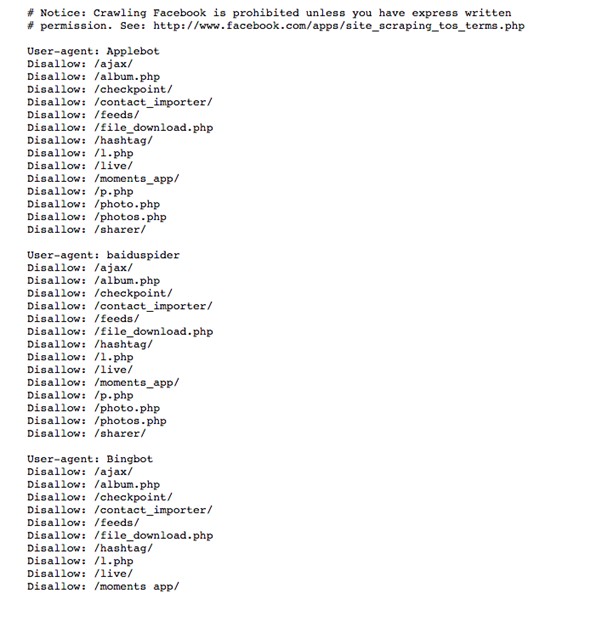

Want an example? Type a website URL into your search browser and include ‘/robots.txt’ at the end. You should find yourself with a text file that outlines the permissions that a crawler has on a website. For example, here is Facebook’s robots.txt file:

So what we see here is that Bingbot (the crawler used by Bing.com) is not allowed to crawl any URL whose path starts with '/photo.php'. This means Bing can't crawl users' Facebook photo pages, so it can't show their content in its SERPs unless those photos are also available at URLs outside the '/photo.php' path.

By understanding robots.txt files, you can begin to grasp the first stage a crawler (also called a spider; Google's own crawler is Googlebot) goes through before your website is indexed. So, here's an exercise for you:

Open your own website's robots.txt file and become familiar with what you do and don't allow crawlers to do. Here's some terminology so you can follow along:

- User-agent: The specific web crawler to which you're giving crawl instructions (usually a search engine's bot, such as Googlebot or Bingbot, or * for all crawlers).

- Disallow: The command used to tell a user-agent not to crawl a particular URL or path.

- Allow: The command that tells a crawler it can access a page or subfolder even though its parent folder is disallowed. It is part of the Robots Exclusion Protocol as standardised in RFC 9309 and is supported by both Googlebot and Bingbot, although some smaller crawlers may ignore it.

- Crawl-delay: How long a crawler should wait between requests, usually interpreted as a number of seconds. Support varies between search engines, and Googlebot ignores this directive.

- Sitemap: Used to call out the location of any XML sitemaps associated with the site, given as a full URL.
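To see how these directives combine, here is a minimal Python sketch using the standard library's `urllib.robotparser` module. The robots.txt content, the example.com domain and the paths are all hypothetical, loosely modelled on the Bingbot rule described above. One caveat applies. Python's parser applies the first rule in a group that matches, whereas Google uses the most specific (longest) matching rule. The narrower Allow line is therefore listed before the broader Disallow line, so both approaches give the same answer.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: example.com and every path below are placeholders.
ROBOTS_TXT = """\
User-agent: Bingbot
Disallow: /photo.php

User-agent: *
Allow: /private/press-kit/
Disallow: /private/
Crawl-delay: 5

Sitemap: https://www.example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    checks = [
        ("Bingbot", "https://www.example.com/photo.php?id=1"),
        ("Googlebot", "https://www.example.com/photo.php?id=1"),
        ("Googlebot", "https://www.example.com/private/accounts"),
        ("Googlebot", "https://www.example.com/private/press-kit/logo.png"),
    ]
    for agent, url in checks:
        verdict = "allowed" if rp.can_fetch(agent, url) else "blocked"
        print(f"{agent:<10} {verdict:<8} {url}")
    # This only reports the file's value; Googlebot itself ignores Crawl-delay.
    print("Crawl-delay for the * group:", rp.crawl_delay("Googlebot"))
    print("Sitemaps:", rp.site_maps())
```

To check a live site instead of a string, `RobotFileParser` also offers `set_url()` and `read()`, which download and parse the file for you.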

How Do Crawlability and Indexability Affect SEO?

Crawlability and indexability form the foundation of your SEO efforts. Together they determine whether search engines can discover your content, interpret it and add it to their index, which is a prerequisite for ranking for relevant search queries.

When these elements work together effectively:

- Search engines can easily find and understand your content

- Your pages appear in search results for relevant queries

- Users can discover your website through organic search

- Your technical SEO health improves, supporting your broader digital marketing strategy

When either crawlability or indexability issues exist, your site’s visibility suffers regardless of your content quality or other optimisation efforts.

What Do Search Engine Crawlers Look For?

A crawler is looking for specific technical factors on a web page to determine what the content is about and how valuable that content is. When a crawler enters a site, the first thing it does is read the robots.txt file to understand its permissions. Once it has permission to crawl a web page, it then looks at:

- HTTP headers: The headers exchanged over HTTP (Hypertext Transfer Protocol) carry information about the client making the request, the requested page, the server and more. The response's status code, such as 200 (OK) or 404 (Not Found), tells crawlers whether the page is available.

- Meta tags: These are snippets of HTML that describe what a web page is about, much like the synopsis of a book. Title tags and meta descriptions help crawlers understand page content.

- Headings: H1 and H2 tags signal the page's main topic and subtopics. Reading them alongside the body copy gives crawlers a sense of what the content covers and how it is organised into a logical hierarchy.

- Images: Images can carry alt text, a short description telling crawlers what the image shows and how it relates to the content. It matters for both SEO and accessibility.

- Body text: Of course, crawlers will read your body copy to help them understand what a web page is all about, analysing keyword usage and topic relevance.

- Internal links: How pages connect to one another helps crawlers understand your site structure and content relationships.
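As a rough illustration of the on-page signals listed above, the following Python sketch uses the standard library's `html.parser` to pull out the title, meta description, H1/H2 headings, images without alt text and same-host links. `PageSignals` is a hypothetical helper, not a model of how Googlebot works internally. Real crawlers also render JavaScript and weigh these signals in ways that aren't public.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class PageSignals(HTMLParser):
    """Collects a few on-page signals: title, meta description, headings, alt text and links."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.meta_description = ""
        self.headings: list[tuple[str, str]] = []  # (tag, text) pairs in document order
        self.images_missing_alt: list[str] = []    # src of each <img> with no or empty alt
        self.internal_links: set[str] = set()
        self._open_tag = None                      # "title", "h1" or "h2" while inside one
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = {name: (value or "") for name, value in attrs}
        if tag in ("title", "h1", "h2"):
            self._open_tag, self._buffer = tag, []
        elif tag == "meta" and attr.get("name", "").lower() == "description":
            self.meta_description = attr.get("content", "")
        elif tag == "img" and not attr.get("alt", "").strip():
            # Empty alt text is correct for purely decorative images, so this is a review list.
            self.images_missing_alt.append(attr.get("src", ""))
        elif tag == "a" and attr.get("href"):
            # Resolve relative links and drop #fragments; same host counts as internal.
            link = urljoin(self.base_url, attr["href"]).split("#")[0]
            if urlparse(link).netloc == urlparse(self.base_url).netloc:
                self.internal_links.add(link)

    def handle_data(self, data):
        if self._open_tag:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._open_tag:
            text = " ".join("".join(self._buffer).split())  # collapse whitespace
            if tag == "title":
                self.title = text
            else:
                self.headings.append((tag, text))
            self._open_tag = None


if __name__ == "__main__":
    page = PageSignals("https://www.example.com/shoes/blue")  # hypothetical URL
    page.feed('<title>Blue Running Shoes</title><h1>Blue Running Shoes</h1>'
              '<img src="/shoe.jpg"><a href="/sizes#chart">Size chart</a>')
    print(page.title, page.headings, page.images_missing_alt, page.internal_links)
```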

With this information, search engines can build a picture of what a piece of content is saying and how valuable it is to a real human reading it.

What Affects Crawlability and Indexability?

Several technical factors can impact your site’s crawlability and indexability:

XML Sitemap

An XML sitemap serves as a roadmap for search engine bots, listing all important pages on your website that should be crawled. A well-structured sitemap helps search engines discover new and updated content more efficiently.

Google Search Console and Bing Webmaster Tools allow you to submit your sitemap URL directly, signalling to search engines which pages are most important. John Mueller of Google has repeatedly emphasised the importance of sitemaps, especially for large or complex websites.
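If your CMS doesn't generate a sitemap for you, the format is simple enough to build yourself. This minimal Python sketch writes a sitemap in the sitemaps.org XML format from a hypothetical mapping of page URLs to last-modified dates. The URLs and dates are placeholders. ElementTree escapes characters such as `&` in URLs, which the sitemap protocol requires.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return sitemap XML for a mapping of absolute page URL -> last-modified date."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, modified in sorted(pages.items()):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url                       # must be an absolute URL
        ET.SubElement(entry, "lastmod").text = modified.isoformat()  # W3C date, e.g. 2024-05-01
    # ET.tostring escapes "&", "<" and ">" in the text nodes.
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    print(build_sitemap({
        "https://www.example.com/": date(2024, 5, 1),
        "https://www.example.com/blog/crawlability-guide/": date(2024, 4, 18),
    }))
```

You would then publish the file on your site (commonly at /sitemap.xml) and submit its URL in Google Search Console or Bing Webmaster Tools, or reference it on a Sitemap: line in robots.txt.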

Internal Linking Structure

Your internal linking structure helps search engines understand your site hierarchy and content relationships. Contextual links between related pages help crawlers discover content and understand topic relevance.

Poor site structure with orphaned pages (no internal links pointing to them) or deep pages (requiring many clicks from the homepage) can hinder crawlability. A logical URL structure and site architecture improve both bot crawling and user experience.
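Click depth and orphaned pages are easy to measure once you have a list of internal links, for example from a site crawler's export. The sketch below runs a breadth-first search from the homepage over a hypothetical link graph. Any known page it never reaches has no internal link path from the homepage, which covers orphaned pages. The list of known pages is assumed to come from somewhere like your sitemap or CMS. The `max_depth=3` threshold is an assumed rule of thumb, not a documented search engine limit.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage: minimum clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # BFS reaches each page first by a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def structure_report(links: dict[str, list[str]], home: str, known_pages: list[str],
                     max_depth: int = 3) -> tuple[list[str], list[str]]:
    """Return (pages unreachable from home, pages deeper than max_depth clicks)."""
    depths = click_depths(links, home)
    unreachable = sorted(set(known_pages) - set(depths))
    too_deep = sorted(page for page, depth in depths.items() if depth > max_depth)
    return unreachable, too_deep
```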

Technical Issues

Various technical SEO issues can impact crawlability and indexability:

- Server errors: 5xx server errors prevent crawlers from accessing content

- 4xx errors: Pages returning 4xx status codes (such as 404 “not found” errors) waste crawl budget and create dead ends

- Redirect loops: Circular redirects confuse crawlers and prevent page indexing

- Duplicate content: Multiple versions of the same content confuse search engines

- Slow loading times: A page with a poor load time may be crawled less frequently

- Mobile optimisation issues: With mobile-first indexing, non-mobile-friendly pages may suffer
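Redirect chains and loops can be spotted from a crawl export that maps each redirecting URL to its target. This Python sketch follows a hypothetical redirect map and labels each starting URL. The `max_hops=10` default is an assumption, chosen to match the redirect limit Google has cited for Googlebot. In practice, any chain of more than one hop is worth collapsing into a single redirect.

```python
def trace_redirects(start: str, redirects: dict[str, str],
                    max_hops: int = 10) -> tuple[list[str], str]:
    """Follow start through a redirect map and classify the result.

    Returns the URLs visited and one of: "ok" (no redirect or a single hop),
    "chain" (two or more hops), "loop" (a URL repeats) or "too long" (> max_hops hops).
    """
    path = [start]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path:
            return path + [target], "loop"
        path.append(target)
        if len(path) - 1 > max_hops:
            return path, "too long"
    return path, "chain" if len(path) - 1 > 1 else "ok"


if __name__ == "__main__":
    # Hypothetical redirect map, e.g. exported from a site crawl.
    redirects = {"/old": "/new", "/a": "/b", "/b": "/c", "/x": "/y", "/y": "/x"}
    for url in ("/old", "/a", "/x"):
        print(url, *trace_redirects(url, redirects))
```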

Content Quality

Search engines increasingly evaluate content based on its value to human users:

- Relevance to search intent: Content that addresses what users are actually searching for

- High-quality content: Comprehensive, accurate, and well-structured information

- Redundant or outdated content: Pages with little unique value may be de-prioritised

- Thin content: Pages with minimal substantive information may be excluded from indexing
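A crude first pass at finding thin or duplicated pages can be automated, as in the Python sketch below. It assumes you already have each page's main text (the page dictionary is hypothetical). It flags pages under a word-count threshold and spots exact duplicates by hashing normalised text. The 200-word threshold is an arbitrary assumption. Search engines don't publish a minimum length, and a short page can be perfectly useful, so treat the output as a list for human review, not a verdict.

```python
import hashlib
import re


def normalise(text: str) -> str:
    """Lower-case the text and keep only word tokens, so formatting differences don't matter."""
    return " ".join(re.findall(r"[a-z0-9']+", text.lower()))


def content_flags(pages: dict[str, str], min_words: int = 200) -> dict[str, list[str]]:
    """Flag thin pages and exact duplicates. min_words is an assumed review threshold."""
    flags: dict[str, list[str]] = {url: [] for url in pages}
    first_seen: dict[str, str] = {}  # text hash -> first URL seen with that text
    for url, text in pages.items():
        words = normalise(text)
        if len(words.split()) < min_words:
            flags[url].append("thin")
        digest = hashlib.sha256(words.encode("utf-8")).hexdigest()
        if digest in first_seen:
            flags[url].append(f"duplicate of {first_seen[digest]}")
        else:
            first_seen[digest] = url
    return flags
```

Hashing only catches pages whose text is identical after normalisation. Near-duplicates need fuzzier techniques, such as comparing overlapping word sequences (shingling).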

How to Find Crawlability and Indexability Issues

Identifying potential crawlability and indexability problems requires dedicated technical SEO tools:

Google Search Console

Google Search Console (GSC) provides invaluable insights into how Google views your site:

- The URL Inspection tool shows the current index status of specific pages

- The Page indexing report (formerly the Coverage report) identifies pages with errors preventing indexing

- The “Request Indexing” feature allows you to ask Google to recrawl specific URLs

- Core Web Vitals reports highlight performance issues affecting user experience

SEO Tools

Professional SEO audit tools can identify a broader range of issues:

- Log file analysers help you see exactly how bots are crawling your site

- Crawl error reports identify broken links and redirect problems

- Site structure visualisations reveal potential navigation issues

- Technical SEO audit reports provide suggestions for improvement
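Log file analysis can start with very little code. The sketch below assumes access logs in the common Apache/Nginx "combined" format. It counts requests whose user-agent string claims to be Googlebot, grouped by status code and by path, and the sample lines are hypothetical. User-agent strings are easy to fake, so a real audit should also verify Googlebot, for example with the reverse DNS check Google describes.

```python
import re
from collections import Counter

# One line of the "combined" log format, e.g.
# 203.0.113.10 - - [10/May/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (...Googlebot/2.1...)"
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+)[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_summary(lines):
    """Count requests claiming to be Googlebot, by status code and by path."""
    statuses, paths = Counter(), Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip malformed lines and other user agents
        statuses[match["status"]] += 1
        paths[match["path"]] += 1
    return statuses, paths


if __name__ == "__main__":
    sample = [  # hypothetical log lines
        '203.0.113.10 - - [10/May/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
        '203.0.113.10 - - [10/May/2024:13:55:40 +0000] "GET /old-page HTTP/1.1" 404 312 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    ]
    statuses, paths = googlebot_summary(sample)
    print("Status codes:", dict(statuses))
    print("Most-crawled paths:", paths.most_common(10))
```

Because `googlebot_summary` accepts any iterable of lines, you can pass it an open log file directly. A high share of 404 or 5xx responses in the status counts points straight at the crawl errors described earlier.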

Manual Checks

Some basic checks you can perform yourself:

- Use the “site:yourdomain.com” search operator for a rough view of which pages Google has indexed (the results aren't guaranteed to be complete)

- Check your robots.txt for unintentional blocking directives

- Check that the mobile version of your site shows the same content as the desktop version, since Google uses mobile-first indexing

- Analyse your internal linking to identify orphaned pages

How to Improve Crawlability and Indexability

Enhancing your site’s crawlability and indexability requires a systematic approach:

Optimise Your Technical Foundation

- Fix broken links and redirect chains that waste crawl budget

- Improve page speed, for example by compressing images and reducing render-blocking scripts

- Implement a logical site structure with intuitive navigation

- Create an XML sitemap and submit it to search engines

- Optimise robots.txt to ensure important content is accessible

- Address mobile optimisation issues with responsive design

Enhance Content Quality

- Audit existing content for relevance and comprehensiveness

- Remove or improve thin content pages

- Consolidate redundant content to prevent cannibalisation

- Create contextual internal links between related pages

- Ensure your target landing pages are easily accessible

Monitor and Maintain

- Regularly check Google Search Console for new indexing issues

- Track your indexability rate (the share of your site's pages that search engines have actually indexed) over time

- Monitor crawl stats to ensure efficient bot traffic

- Use Google Analytics to spot potential content quality issues through metrics like bounce rate and average engagement time

- Stay up to date with technical SEO best practices through sources such as Google Search Central and online developer communities

But Here’s the Thing…

There are more than 200 ranking factors that Google's algorithms consider. It's a complicated process, but so long as your technical checks are in place, you give your content a strong chance of ranking well. Backlinks, for example, are extremely important in determining how authoritative a piece of content is, as is the overall authority of the site.

SEO is all about ensuring your content has the correct technical checks in place. It's about making sure your robots.txt file gives crawlers permission to access it, that crawlers can easily understand your meta tags, that your page headings are clear and relate to the body copy, and that what you provide your readers is valuable and worth reading.

And this last point is quite possibly the most important: value is everything. Because let's face it, if a human wouldn't want to read your content, an algorithm designed to reward helpful content isn't going to rank it. The best SEO strategy focuses on creating valuable content for human users first, then ensuring search engine bots can properly discover and understand it.

By addressing both crawlability and indexability issues, you create the technical foundation your content needs to succeed in organic search. That might mean driving targeted traffic to product pages on eCommerce sites or to evergreen, value-driven landing pages that support your broader digital marketing goals. The salient point is that technical foundations and valuable content work together to help your pages rank – you can't have one without the other.